Dijkstra's shortest path -> Lagrangian mechanics? I

Context

Have you seen the latest news? These are now old, but anyways...

These guys managed to improve the Ford-Fulkerson algorithm to a O(n(1+e)) time complexity. Pretty good.

While reading the paper, I came across this:

In their breakthrough work on solving Laplacian systems and computing electrical flows, Spielman and Teng [ST04] introduced the idea of combining continuous optimization primitives with graph-theoretic constructions for designing flow algorithms. This is often referred to as the Laplacian Paradigm.

Emphasis mine.

The strange thing is that by using a continuous approach to a discrete graph problem, they managed to get these new results.

Why is this puzzling?

Usually, at your average CS/Data+Algs course the teacher at some point talks about the problem of finding the shortest path between two points. This can be solved pretty well by using D's algorithm, by iterating and updating some data structures.

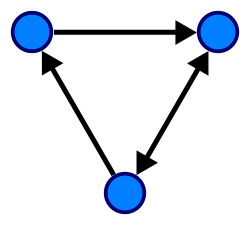

D's algorithm works on graphs by using greedy iteration. Graphs are discrete units:

In physics, the shortest path problem is related to Lagrangian1 mechanics. Basically, nature optimizes energy use if an action is conservative.

- By doing fancy differentiation on a higher order function (the Lagrangian), and setting this fancy derivative to 0 (aka finding the minima or maxima), you can find the shortest path Y, from a to b.

- Differentiation works only in functions, which are continuous.

So, maybe there is a missing link between the discrete graph approach, and the continuous functional one. If you find it, Dijkstra's algorithm becomes just another calculus optimization problem. Then, you can fine-tune it to a lower O() complexity.